The goal of my research is to understand what happens in our brain when we learn something. I have approached this question from four different angles. Currently, we are investigating how the balance of excitation and inhibition that is often observed in cortical neurons is maintained and what effect it has on synaptic plasticity, the putative neurobiological substrate for learning. The second project focuses on how memories can be transferred from one place in the brain to another. Thirdly, we have tried to understand how we learn to choose our actions such that we maximize the benefit or reward we receive in return. In particular, I am interested in whether reward learning, which is typically implicated in behavioral learning, can be transferred to the sensory domain and used to describe sensory and perceptual learning. Finally, I have worked extensively on a more abstract learning approach to how our visual system establishes invariant representations of our environment.

Cortical neurons receive a balance of excitatory and inhibitory currents. This balance is thought to be essential for network stability and has profound effects on the dynamics and response properties of the network. Unfortunately, it also poses a problem for learning and memory, because every change in excitatory connections puts the balance, and therefore network stability, at risk.

We have recently suggested inhibitory plasticity as a mechanism that establishes the balance by means of self-organization. This mechanism not only works robustly in both feedforward and recurrent networks but also provides testable predictions for GABAergic synaptic plasticity. The most interesting aspect of the mechanism is that it is self-organized and can therefore maintain the balance dynamically in the presence of excitatory plasticity. This makes it possible, for the first time, to study the effect of the balance on learning and memory, which is what we are currently exploring. This line of research is funded by the German Federal Ministry for Science and Education through the Bernstein Award 2011.

Related publications:

T. Vogels, H. Sprekeler, F. Zenke, C. Clopath, W. Gerstner, Science (2011)

The standard approach to modeling behavioral learning is the framework of reinforcement learning. The basic hypothesis is that animals adjust their behavior in order to maximize the payoff or reward they receive from the environment. Reinforcement learning is successful at describing behavioral adaptations phenomenologically, but we are only starting to unravel how our brain implements it.

A prominent candidate for a biological substrate of behavioral learning is synaptic plasticity or, more generally, neuronal plasticity. We know that plasticity is involved in learning and memory. Moreover, in the last decade, it has become clear that plasticity is regulated by neuromodulatory signals like dopamine or acetylcholine, which are candidate mechanisms for signaling reward or awareness and interest.

In this project, we investigated how reinforcement learning can be implemented in biologically realistic networks of spiking neurons. In particular, one might ask if there is an interplay between the learning rules that can be used to maximize reward and the neural code in use in the network. We were also interested in whether there are basic requirements, e.g., for synaptic plasticity, to be interpretable as an implementation of reward learning. Finally, we are investigating whether some failures of perceptual learning could be attributed to violations of these requirements.
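To make the notion of reward-modulated plasticity concrete, here is a minimal sketch of a three-factor learning rule, in which a weight change is the product of a global reward signal and a local, activity-dependent term. It is a generic REINFORCE-style rule for a single stochastic binary unit, chosen for illustration only, and not the spiking-network rules studied in the publications below. The task (two stimuli, two actions, reward for the matching action) and all names and parameter values (`train_reward_modulated`, `eta`, `baseline_rate`) are assumptions of this example.

```python
import numpy as np


def train_reward_modulated(n_trials=3000, eta=0.5, baseline_rate=0.05, seed=0):
    """Single stochastic unit trained with an assumed three-factor rule:

        dw = eta * (reward - running mean reward) * (action - p) * input
    """
    rng = np.random.default_rng(seed)
    w = np.zeros(2)          # one weight per stimulus
    mean_reward = 0.0        # running estimate of the expected reward
    rewards = np.empty(n_trials)

    for trial in range(n_trials):
        stim = int(rng.integers(2))          # stimulus 0 or 1
        x = np.zeros(2)
        x[stim] = 1.0                        # one-hot input
        p = 1.0 / (1.0 + np.exp(-(w @ x)))   # probability of choosing action 1
        action = int(rng.random() < p)
        reward = 1.0 if action == stim else 0.0

        # Local term: how the chosen action deviated from its expectation.
        eligibility = (action - p) * x
        # Global term: reward relative to what was expected on average.
        w += eta * (reward - mean_reward) * eligibility
        mean_reward += baseline_rate * (reward - mean_reward)
        rewards[trial] = reward

    return w, rewards


if __name__ == "__main__":
    w, rewards = train_reward_modulated()
    print("weights:", np.round(w, 2))
    print("reward rate, last 500 trials:", rewards[-500:].mean())
```

The reward enters only as a single scalar that is broadcast to all synapses, which is the role a neuromodulator such as dopamine is hypothesized to play. Subtracting the running mean reward is one simple way of making the modulatory signal reflect unexpected rather than absolute reward.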

Related publications:

N. Fremaux, H. Sprekeler, W. Gerstner, Journal of Neuroscience (2010)

H. Sprekeler, G. Hennequin, W. Gerstner, NIPS (2009)

M. H. Herzog, K. C. Aberg, N. Fremaux, W. Gerstner, H. Sprekeler, Vision Research (accepted in 2011)

We are perpetually facing the need to make decisions. The standard laboratory setting to study decision making is the binary forced-choice setting, where a subject is asked to give one of two possible answers in response to a sensory stimulus. A classical mathematical description of such experiments is the drift-diffusion (DD) model, which is used to describe both the accuracy and the reaction times of the subjects. It assumes an accumulation of evidence until a decision variable reaches one of two decision bounds.
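The accumulation-to-bound idea behind the DD model can be sketched in a few lines. The following is a minimal simulation, assuming a constant drift, Gaussian white noise, symmetric bounds and a simple Euler discretization. The function name and all parameter values are hypothetical and are not taken from the experiments described here.

```python
import numpy as np


def simulate_ddm(drift, bound=1.0, noise=1.0, dt=1e-3, max_time=5.0,
                 n_trials=1000, seed=0):
    """Simulate drift-diffusion trials in parallel.

    Returns (choice, rt): choice is +1 (upper bound), -1 (lower bound) or
    0 (no bound reached within max_time); rt is the decision time in seconds
    (NaN for undecided trials).
    """
    rng = np.random.default_rng(seed)
    x = np.zeros(n_trials)                 # decision variable per trial
    choice = np.zeros(n_trials, dtype=int)
    rt = np.full(n_trials, np.nan)
    n_steps = int(round(max_time / dt))

    for step in range(1, n_steps + 1):
        active = choice == 0               # trials still accumulating
        if not active.any():
            break
        # Evidence increment: deterministic drift plus diffusion noise.
        x[active] += (drift * dt
                      + noise * np.sqrt(dt) * rng.standard_normal(active.sum()))
        hit_upper = active & (x >= bound)
        hit_lower = active & (x <= -bound)
        choice[hit_upper] = 1
        choice[hit_lower] = -1
        rt[hit_upper | hit_lower] = step * dt

    return choice, rt


if __name__ == "__main__":
    choice, rt = simulate_ddm(drift=1.0)
    decided = choice != 0
    print("fraction of upper-bound choices:", (choice[decided] == 1).mean())
    print("mean decision time (s):", np.nanmean(rt))
```

A larger drift, corresponding to a stronger stimulus, makes upper-bound choices both more frequent and faster, which is how the model links accuracy and reaction times through a single process.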

The DD model is mostly tested for stimuli that contain relatively small amounts of information, so that subjects can only make an informed decision by accumulating evidence over time. Most of our daily decisions, however, are based on strong stimuli that allow and may even require rapid decisions. Is the DD model also a good description for this setting?

We are investigating this question by providing time-varying stimuli, so that averaging over time is the wrong strategy for the subjects. This setting allows us to investigate the temporal process of evidence integration. Our results show that the time course of evidence integration in rapid decision making is probably not compatible with the DD model and that evidence integration and the actual decision process may be separable.

Related publications:

J. Rüter, H. Sprekeler, W. Gerstner, M. H. Herzog, PLoS One (2012)

J. Rüter, N. Marcille, H. Sprekeler, W. Gerstner, M. H. Herzog, PLoS Computational Biology (2012)

In order to interact with our environment, we have to know its structure. To this end, we are constantly collecting data with our sensory organs. These sensory data are analyzed by our brain in order to extract information such as which objects or people surround us or where they are.

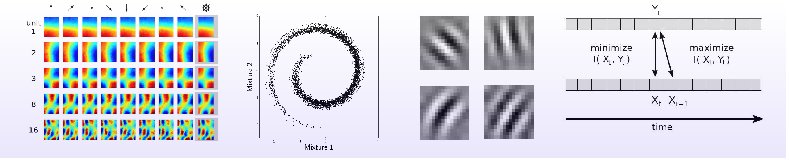

Because we are so good at interpreting the world, we hardly ever realize that this analysis is in fact extremely complicated. This can nicely be illustrated by the example of visual processing. On the level of retinal photoreceptors, the same object can look vastly different depending on its position in our visual field, its distance from the observer and myriad other influences. In fact, the visual impression a particular object makes will never be exactly the same twice. Therefore, in order to recognize the objects that surround us, our brain has to somehow develop a representation of the world that discards all kinds of irrelevant factors, i.e., a representation that is invariant with respect to these factors.

The basic assumption in my research is that these representations are the result of a learning process. One learning principle that has been proposed for learning these representations is the slowness principle. Objects in our surroundings are typically present for a certain period of time. The internal representation of the objects should therefore change on exactly this time scale, whereas the primary sensory stimuli we collect change on a much shorter time scale. Invariant representations can therefore be learned by adjusting our sensory processing such that the internal representation changes slowly in time.

Most of my research on this question has concentrated on Slow Feature Analysis, which is one particular implementation of the slowness principle, but I have also investigated how the idea of slowness could be biologically implemented and how it is related to other learning principles such as predictive coding and Laplacian eigenmaps.
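As a rough illustration of the slowness principle, the sketch below implements the linear special case of Slow Feature Analysis: the input is whitened, and the directions along which the whitened signal changes most slowly are found from the covariance of its temporal differences. Practical applications usually add a nonlinear expansion of the input first, which is omitted here. The function name and the toy signals (one slow and one fast sine wave, linearly mixed) are assumptions made for this example.

```python
import numpy as np


def linear_sfa(x, n_components=1):
    """Linear Slow Feature Analysis on a signal x of shape (time, dims).

    Returns (outputs, weights): unit-variance, decorrelated output signals
    ordered from slowest to fastest, and the weights applied to centered x.
    """
    x = x - x.mean(axis=0)
    # Step 1: whiten the input so that every direction has unit variance.
    cov = x.T @ x / len(x)
    eigval, eigvec = np.linalg.eigh(cov)
    whitener = eigvec / np.sqrt(eigval)    # scales each eigenvector column
    z = x @ whitener
    # Step 2: find directions in which the whitened signal varies slowest,
    # i.e. the smallest eigenvalues of the covariance of its differences.
    dz = np.diff(z, axis=0)
    dcov = dz.T @ dz / len(dz)
    _, dvec = np.linalg.eigh(dcov)         # eigenvalues in ascending order
    weights = whitener @ dvec[:, :n_components]
    return x @ weights, weights


# Toy example: a slow and a fast source, observed only as linear mixtures.
t = np.linspace(0.0, 2.0 * np.pi, 2000)
slow = np.sin(t)
fast = np.sin(23.0 * t)
mixing = np.array([[1.0, 0.5],
                   [0.7, -1.2]])
mixed = np.column_stack([slow, fast]) @ mixing

outputs, weights = linear_sfa(mixed, n_components=1)
correlation = np.corrcoef(outputs[:, 0], slow)[0, 1]
print("correlation of slowest feature with slow source:", correlation)
```

The slowest output recovers the slow source up to sign and scale, even though neither input channel contains it in isolation. The unit-variance constraint imposed by the whitening step is what rules out the trivial constant solution.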

Related publications:

H. Sprekeler, Neural Computation (2011)

M. Franzius, H. Sprekeler, L. Wiskott, PLoS Computational Biology (2007)

H. Sprekeler, C. Michaelis, L. Wiskott, PLoS Computational Biology (2007)

H. Sprekeler, L. Wiskott, Neural Computation (2011)

F. Creutzig, H. Sprekeler, Neural Computation (2008)

Details on Slow Feature Analysis can be found on Laurenz Wiskott's project pages.